Sawyer Mobile Device Interaction

For the final project in my Advanced Robotics class, I proposed a method that enables Sawyer, a collaborative robot arm from Rethink Robotics, to perform single-touch and multi-touch gestures on a mobile device. The project has two purposes. The first is to enable automated testing of smartphones and mobile applications with cobots. The second, longer-term goal is cobot teleoperation driven by human biological signals, such as electromyography (EMG) or gaze, so that people with mobility-limiting disabilities can fully control touchscreen devices without using their arms.

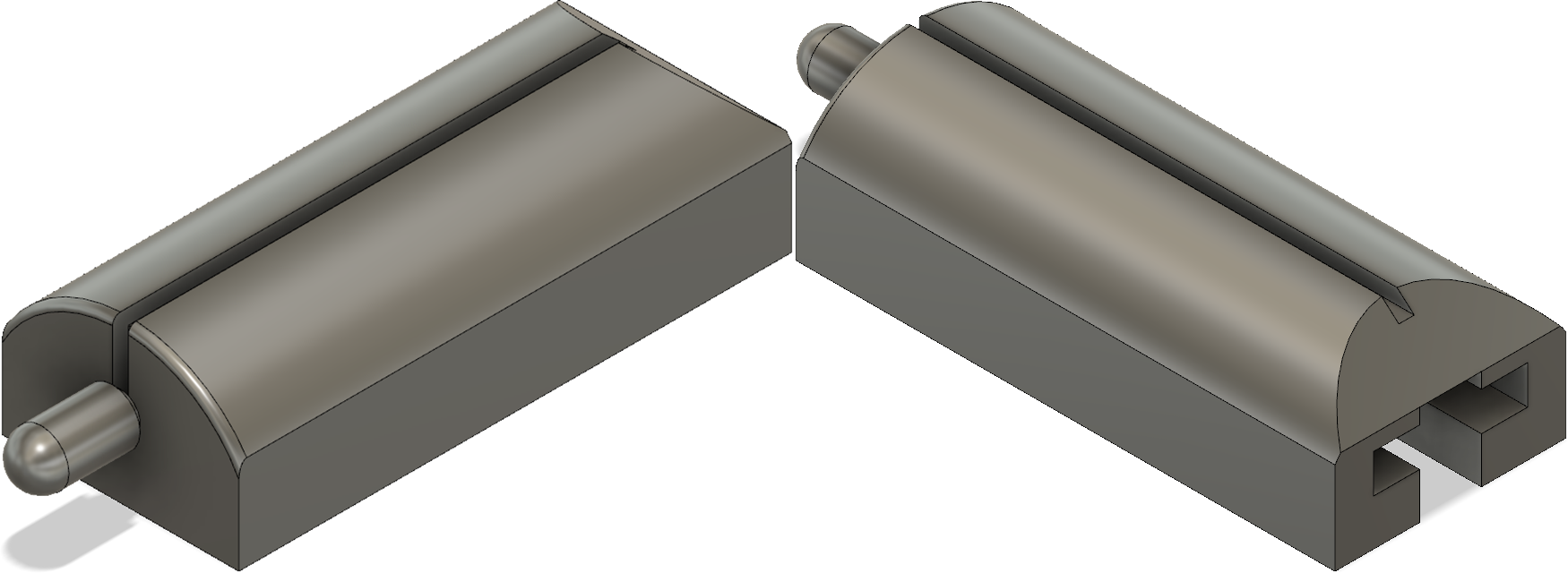

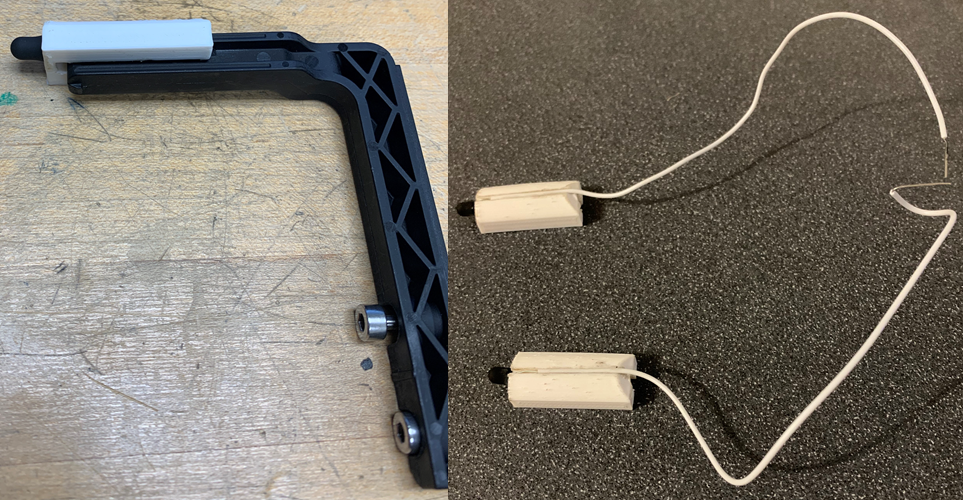

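I began by using Autodesk Fusion 360 to design new “fingers” for Sawyer’s electric parallel gripper. The fingers were designed to accommodate capacitive stylus pen tips and grounding wires. The grounding wires matter because a capacitive screen generally registers a touch only when the conductive tip is coupled to a large conductive body, which is normally the user’s hand.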

Finger CAD Model

Finger Assembly

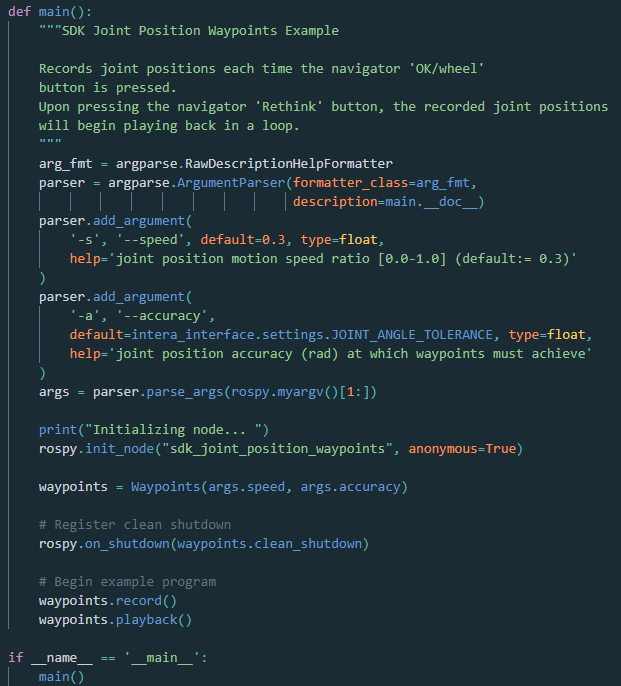

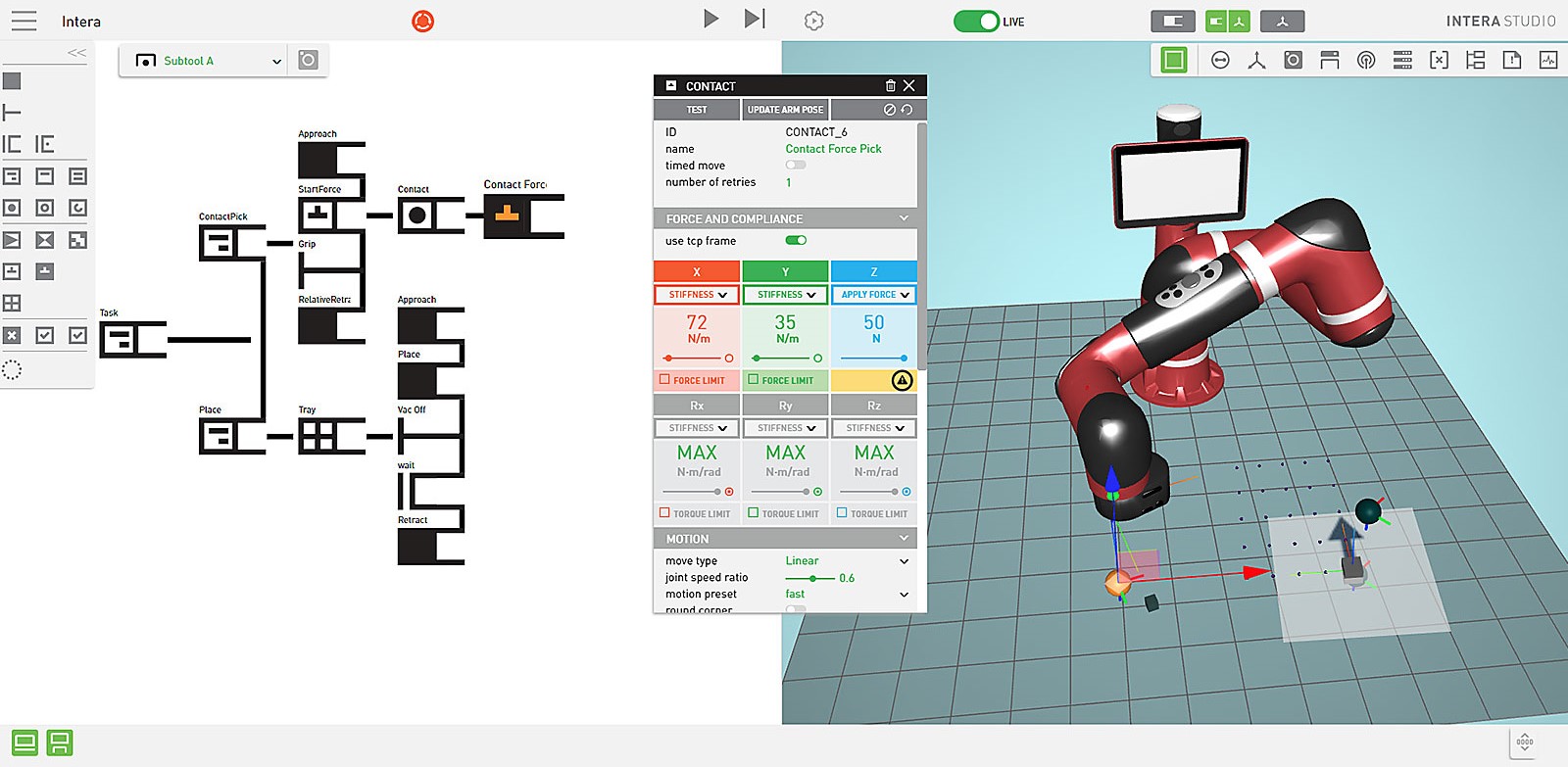

After booting Sawyer in SDK Mode, I established communication between Sawyer and my workstation over a LAN connection using the Robot Operating System (ROS). With the intera_interface library and the documentation provided by Rethink Robotics, I was able to read and write the arm’s endpoint frame (position and orientation), its joint angles, and the force applied at the endpoint. Building on this, I wrote custom Python scripts that have Sawyer perform tap, swipe, and pinch gestures. A snippet of Python code for controlling Sawyer with the intera_interface library is shown below.

Code Snippet
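The following is not the project’s code but a minimal sketch of how the three gestures can be reduced to short sequences of stylus-tip positions. The helper names `tap`, `swipe`, `pinch`, and `lerp`, the 2 cm hover height, and the assumption that the stylus always points straight down (so orientation can be left out) are all illustrative choices. For `pinch`, it also assumes that one stylus tip sits on each gripper finger, so the tip spacing follows the gripper opening. The sketch only plans positions. On the real robot, each waypoint would still have to be converted to joint angles with an inverse-kinematics solver and commanded through intera_interface (for example with `Limb.move_to_joint_positions`), and that step is not shown.

```python
from typing import List, Tuple

# A waypoint is an (x, y, z) stylus-tip position in metres in the robot's base
# frame. Assumption: the stylus always points straight down at the screen, so
# orientation is held constant and left out.
Waypoint = Tuple[float, float, float]

HOVER_HEIGHT = 0.02  # hypothetical clearance above the glass, in metres


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def tap(x: float, y: float, screen_z: float,
        hover: float = HOVER_HEIGHT) -> List[Waypoint]:
    """Hover above (x, y), touch the glass once, then retract."""
    above = (x, y, screen_z + hover)
    return [above, (x, y, screen_z), above]


def swipe(start: Tuple[float, float], end: Tuple[float, float],
          screen_z: float, steps: int = 10,
          hover: float = HOVER_HEIGHT) -> List[Waypoint]:
    """Touch down at start, slide along the glass to end, then lift off."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    (x0, y0), (x1, y1) = start, end
    path = [(x0, y0, screen_z + hover)]  # hover over the start point
    for i in range(steps + 1):
        t = i / steps
        path.append((lerp(x0, x1, t), lerp(y0, y1, t), screen_z))
    path.append((x1, y1, screen_z + hover))  # lift off over the end point
    return path


def pinch(open_width: float, closed_width: float, steps: int = 10,
          spread: bool = False) -> List[float]:
    """Gripper openings (m) for a two-finger pinch made with the gripper.

    Assumption: one stylus tip is mounted on each gripper finger, so the
    distance between tips follows the gripper opening. By default the
    gripper closes (pinch in); spread=True reverses it (pinch out).
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    widths = [lerp(open_width, closed_width, i / steps)
              for i in range(steps + 1)]
    return widths[::-1] if spread else widths
```

Keeping the planning separate from the robot interface means the waypoint lists can be checked offline. For instance, `swipe((0.5, 0.0), (0.7, 0.0), screen_z=0.05, steps=4)` yields one hover point, five on-glass points, and one lift-off point; the coordinates here are placeholders, not measured values.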

Intera Software Platform
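Since the endpoint force can be read as well as the pose, one way to make taps tolerant of small errors in the phone’s estimated height is to lower the stylus until the sensed force crosses a threshold. This is a minimal sketch under stated assumptions, not the project’s method: `find_contact_height` and `fake_screen` are hypothetical names, the 2 N threshold and 1 mm step are placeholder values, and `read_force_z` is an assumed callback that moves the tip to a given height and returns the vertical force. On the robot, such a callback might wrap a small Cartesian move followed by a read of the `force` entry returned by intera_interface’s `Limb.endpoint_effort()`; here `fake_screen` stands in for it as a simple spring model of the glass.

```python
from typing import Callable, Optional


def fake_screen(z: float, surface: float = 0.05,
                stiffness: float = 2000.0) -> float:
    """Hypothetical stand-in for the robot: a spring-like touchscreen.

    Returns a reaction force (N) proportional to how far the tip at height
    z (m) has pressed below the glass surface, and zero above it.
    """
    return max(0.0, surface - z) * stiffness


def find_contact_height(start_z: float,
                        read_force_z: Callable[[float], float],
                        threshold: float = 2.0,
                        step: float = 0.001,
                        max_travel: float = 0.03) -> Optional[float]:
    """Step the stylus down until the vertical force reaches `threshold`.

    `read_force_z(z)` is an assumed callback that moves the tip to height z
    and returns the vertical endpoint force. Returns the first height at
    which contact is detected, or None if `max_travel` is exhausted.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    n_steps = int(round(max_travel / step))
    for i in range(n_steps + 1):
        z = start_z - i * step
        # Compare magnitudes, so the sensor's sign convention does not matter.
        if abs(read_force_z(z)) >= threshold:
            return z
    return None


if __name__ == "__main__":
    # Start 2 cm above a simulated screen whose surface is at z = 0.05 m.
    print(find_contact_height(0.07, fake_screen, threshold=1.0))
```

Capping the descent with `max_travel` keeps a missed or misplaced phone from driving the stylus downward indefinitely, and comparing the force magnitude avoids depending on which sign the sensor reports for a push.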

More information about this project is available on GitHub.