Gesture-Controlled Drone Simulation

I worked with two fellow students on a final project for our Biorobotics/Cybernetics class. The project had two goals: to build a machine learning model that accurately classifies a predetermined set of hand and arm gestures from a user’s biosignals, and to use that model to control a quadcopter drone’s trajectory in a real-time simulation environment.

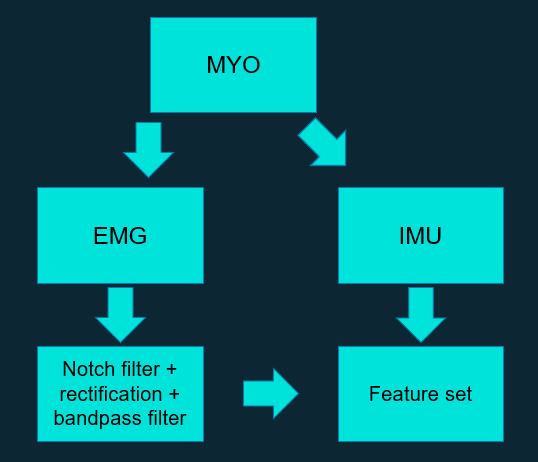

We began by agreeing on a set of fifteen hand/arm gestures that map to commands for the drone to move and rotate along all axes in 3D space. We then collected electromyography (EMG) and accelerometer/gyroscope (IMU) signals with a Myo Armband while each of us performed these gestures, for a total of 300 samples.

Myo Gesture

Preprocessing was necessary because these biosignals are very low in voltage and susceptible to noise. We applied band-pass and notch filters to the EMG signals, along with rectification and a zero-mean operation. We smoothed the IMU signals with a rolling average.

Preprocessing

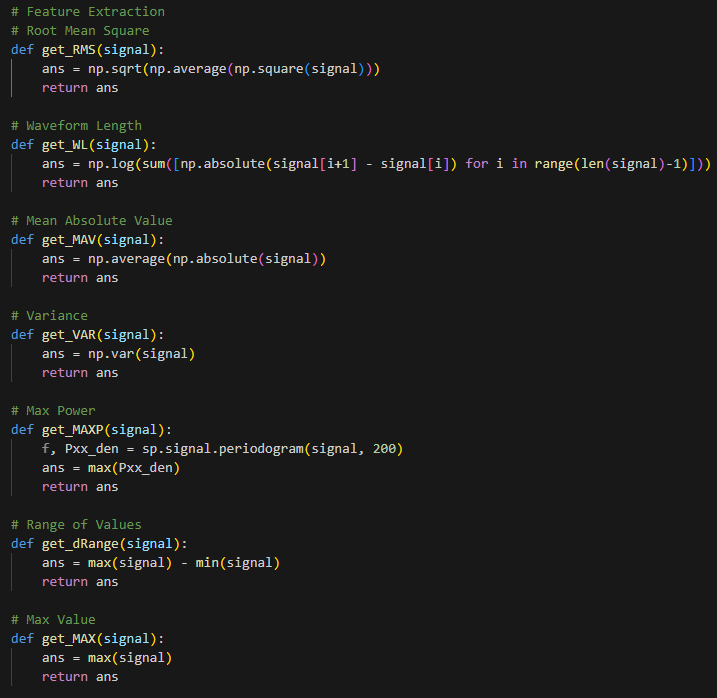
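The preprocessing steps described above could be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the sampling rate, band edges, notch frequency, filter order, window length, and the order of operations are all assumptions chosen for the example, and `preprocess_emg` and `smooth_imu` are hypothetical names.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch

EMG_FS = 200.0  # Hz; assumed EMG sampling rate for this sketch


def preprocess_emg(emg, fs=EMG_FS, band=(20.0, 90.0), notch_hz=60.0, notch_q=30.0):
    """Clean raw EMG of shape (n_samples, n_channels).

    All cutoff values are illustrative assumptions, not the project's settings.
    """
    emg = np.asarray(emg, dtype=float)
    # Zero-mean: remove the DC offset of each channel.
    emg = emg - emg.mean(axis=0, keepdims=True)
    # Band-pass: keep the frequency range where EMG energy is expected.
    b, a = butter(4, band, btype="bandpass", fs=fs)
    emg = filtfilt(b, a, emg, axis=0)
    # Notch: suppress power-line interference.
    b, a = iirnotch(notch_hz, notch_q, fs=fs)
    emg = filtfilt(b, a, emg, axis=0)
    # Rectification: take the absolute value of the filtered signal.
    return np.abs(emg)


def smooth_imu(imu, window=5):
    """Rolling average over IMU data of shape (n_samples, n_channels)."""
    imu = np.asarray(imu, dtype=float)
    kernel = np.ones(window) / window
    # Edge-pad so the output has the same length as the input.
    left = window // 2
    right = window - 1 - left
    padded = np.pad(imu, ((left, right), (0, 0)), mode="edge")
    columns = [np.convolve(padded[:, c], kernel, mode="valid")
               for c in range(imu.shape[1])]
    return np.stack(columns, axis=1)
```

Zero-phase filtering with `filtfilt` is used here so the filters do not shift the signal in time; that suits recorded samples, while a live stream would need a causal filter instead.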

After conducting a literature survey, our team agreed on a set of straightforward features to characterize each hand/arm gesture, such as mean absolute value, variance, and max power. We computed these features for each channel of each sample before feeding them into the machine learning methods we chose.

Feature Extraction

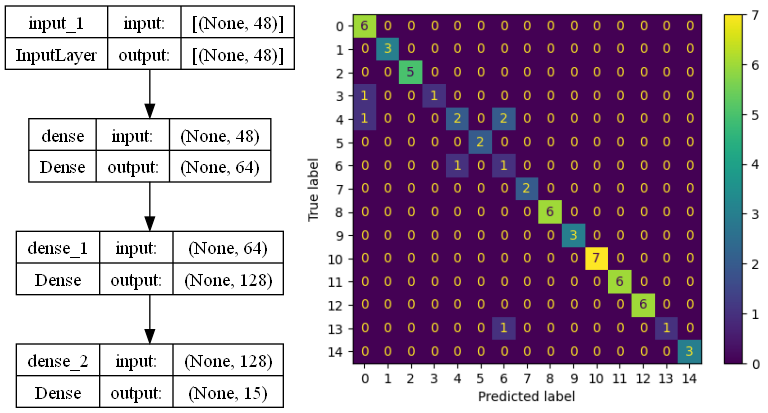
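As a rough illustration of per-channel feature extraction, the sketch below computes the three features named above for one sample. The function name `extract_features` is hypothetical, and "max power" is interpreted here as the peak of the channel's power spectrum, which is an assumption; the project may have defined it differently.

```python
import numpy as np


def extract_features(sample):
    """Compute features for one sample of shape (n_samples, n_channels).

    Returns a 1D vector: [MAV per channel, variance per channel,
    max power per channel].
    """
    sample = np.asarray(sample, dtype=float)
    # Mean absolute value of each channel.
    mav = np.mean(np.abs(sample), axis=0)
    # Variance of each channel.
    var = np.var(sample, axis=0)
    # Assumed definition of max power: largest bin of the power spectrum.
    spectrum = np.abs(np.fft.rfft(sample, axis=0)) ** 2 / sample.shape[0]
    max_power = spectrum.max(axis=0)
    return np.concatenate([mav, var, max_power])
```

With eight EMG channels this yields a 24-element feature vector per sample, which can then be passed to a classifier.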

Our research indicated that neural networks (NN), support vector machines (SVM), and long short-term memory (LSTM) networks have shown promising results for classifying EMG data. Each of us trained one of these methods on our collected EMG data, and my neural network achieved the highest accuracy on previously unseen samples (about 90%). The original NN’s structure and the resulting confusion matrix are shown below.

EMG Neural Network

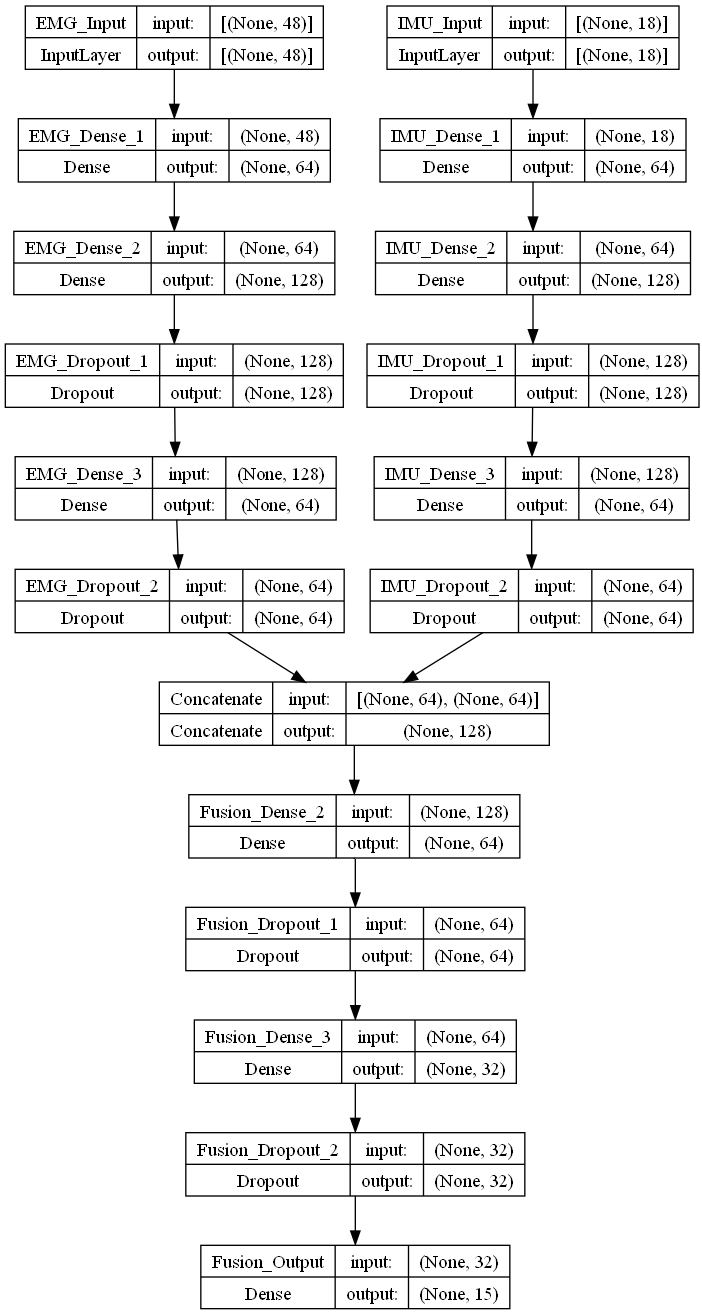

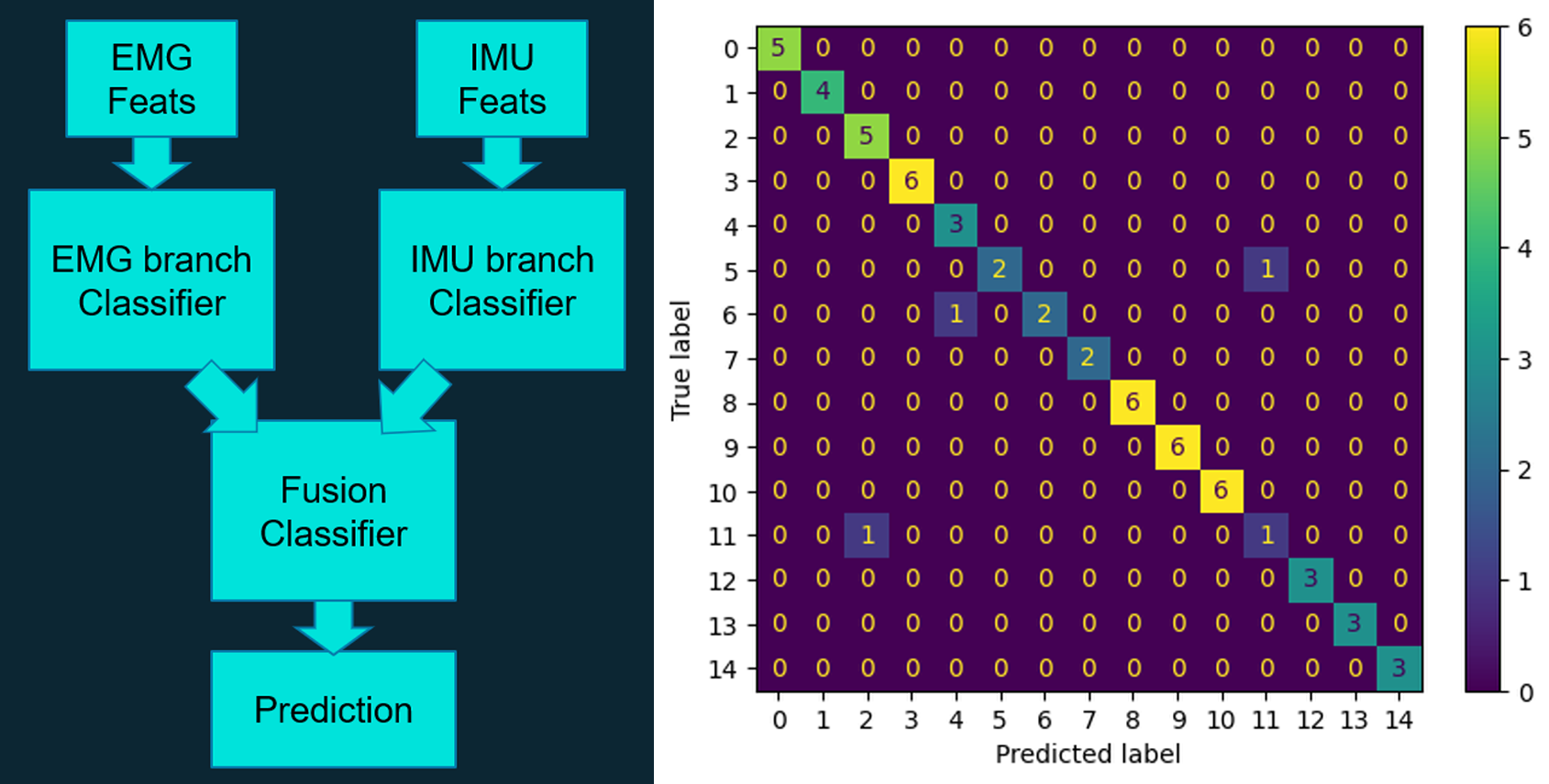
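For readers unfamiliar with confusion matrices, the sketch below shows how one can be built from true and predicted gesture labels, and how overall accuracy follows from it. The helper names are hypothetical and the labels in the usage note are made up for illustration; they are not the project's results.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # Increment the (true, predicted) cell once per sample.
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def accuracy(cm):
    """Fraction of samples on the diagonal (correct predictions)."""
    return np.trace(cm) / cm.sum()
```

For example, `confusion_matrix([0, 1, 2, 2], [0, 2, 2, 2], 3)` places one count off the diagonal (a class-1 sample predicted as class 2), and `accuracy` of that matrix is 0.75.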

To make our team’s gesture classification method novel, we modified my neural network to fuse EMG features with IMU features. The final neural network consisted of an EMG branch classifier, an IMU branch classifier, and a fusion classifier that concatenates the learned features from each data type. This resulted in a gesture classification accuracy of 95% on test samples. The final network’s structure and the resulting confusion matrix are shown below.

Fusion Neural Network Structure

Fusion Neural Network
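The three-part structure described above (an EMG branch, an IMU branch, and a fusion head over their concatenated hidden features) can be sketched as a plain NumPy forward pass. This is only an illustration of the idea: the layer sizes, the single hidden layer per branch, and the random untrained weights are assumptions, and the class name `FusionNet` is hypothetical. The actual network's framework and dimensions are not stated here.

```python
import numpy as np

N_CLASSES = 15  # fifteen gestures, per the project description


def relu(x):
    return np.maximum(x, 0.0)


def softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def dense(rng, n_in, n_out):
    """Return (weights, bias) for one fully connected layer (random, untrained)."""
    return rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)


def apply(layer, x):
    weights, bias = layer
    return x @ weights + bias


class FusionNet:
    """Two feature branches plus a fusion head (sizes are assumptions)."""

    def __init__(self, n_emg_feats, n_imu_feats, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        self.emg_hidden = dense(rng, n_emg_feats, hidden)
        self.imu_hidden = dense(rng, n_imu_feats, hidden)
        self.emg_head = dense(rng, hidden, N_CLASSES)
        self.imu_head = dense(rng, hidden, N_CLASSES)
        self.fusion_head = dense(rng, 2 * hidden, N_CLASSES)

    def forward(self, emg_feats, imu_feats):
        # Each branch learns its own hidden representation.
        h_emg = relu(apply(self.emg_hidden, emg_feats))
        h_imu = relu(apply(self.imu_hidden, imu_feats))
        # Fusion: concatenate the learned features from both branches.
        fused = np.concatenate([h_emg, h_imu], axis=-1)
        return {
            "emg": softmax(apply(self.emg_head, h_emg)),
            "imu": softmax(apply(self.imu_head, h_imu)),
            "fusion": softmax(apply(self.fusion_head, fused)),
        }
```

Keeping a classifier head on each branch lets the branches be trained or evaluated on their own, while the fusion head produces the final prediction from both data types.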

With the machine learning portion finalized, we applied it to a drone simulation environment. We chose the open-source flight simulator Flightmare because it interfaces with the Robot Operating System (ROS), which the team was already familiar with. We wrote a Python script that loads the trained fusion network, uses the ros_myo package to continuously stream live gesture data into a buffer, classifies the incoming data, and publishes its prediction to a ROS topic named /COMMAND. We also wrote a C++ program that opens a ROS-enabled drone simulation environment, subscribes to the /COMMAND topic, and moves the drone in the direction associated with the fusion network’s prediction.
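The buffer-classify-publish loop in the Python script might look something like the sketch below. To keep it runnable without ROS, the classifier and the publisher are passed in as plain callables; in a real node, `publish` could be the `publish` method of a `rospy.Publisher` on `/COMMAND`, and `on_sample` would be called from the armband subscriber callback. The class name `GestureStreamer`, the window length, and the choice of non-overlapping windows are all assumptions for illustration.

```python
from collections import deque

import numpy as np


class GestureStreamer:
    """Buffer incoming samples and publish one prediction per full window."""

    def __init__(self, classify, publish, window=200):
        self.classify = classify  # callable: (window, n_channels) array -> label
        self.publish = publish    # callable: label -> None
        self.window = window      # samples per classification (assumed value)
        self.buffer = deque(maxlen=window)

    def on_sample(self, sample):
        """Handle one incoming sensor reading; return a label if one was published."""
        self.buffer.append(np.asarray(sample, dtype=float))
        if len(self.buffer) < self.window:
            return None
        label = self.classify(np.stack(self.buffer))
        self.publish(label)
        # Start a fresh, non-overlapping window (an assumption of this sketch).
        self.buffer.clear()
        return label
```

Injecting the callables keeps the buffering logic testable on its own; the subscriber on the simulator side only ever sees the published command labels.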

More information about this project is available on GitHub.